Pass the Databricks Certification Databricks-Certified-Professional-Data-Engineer Questions and answers with ExamsMirror

An upstream system has been configured to pass the date for a given batch of data to the Databricks Jobs API as a parameter. The notebook to be scheduled will use this parameter to load data with the following code:

df = spark.read.format("parquet").load(f"/mnt/source/{date}")

Which code block should be used to create the date Python variable used in the above code block?
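As a rough illustration of how a job parameter can reach the notebook, the sketch below reads a parameter named date through the widgets interface and builds the load path. It assumes the parameter arrives as a notebook widget; `StubWidgets` and `batch_source_path` are hypothetical names, and the stub exists only so the sketch runs outside Databricks, where `dbutils.widgets` would be passed in instead.

```python
class StubWidgets:
    """Hypothetical stand-in for dbutils.widgets (not a Databricks class)."""

    def __init__(self, values):
        self._values = dict(values)

    def text(self, name, default_value, label=None):
        # Register the widget; keep a value that was already supplied.
        self._values.setdefault(name, default_value)

    def get(self, name):
        return self._values[name]


def batch_source_path(widgets, param="date"):
    """Read the batch date from a widget and build the source path."""
    widgets.text(param, "null")   # declare the widget with a default value
    date = widgets.get(param)     # value passed in by the job run
    return f"/mnt/source/{date}"


# Assumed example value; a real run would receive it from the Jobs API.
path = batch_source_path(StubWidgets({"date": "2024-01-31"}))
print(path)
```

In a notebook, the same function would be called as `batch_source_path(dbutils.widgets)` and the result handed to `spark.read.format("parquet").load(...)`.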

The data architect has mandated that all tables in the Lakehouse should be configured as external (also known as "unmanaged") Delta Lake tables.

Which approach will ensure that this requirement is met?
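For context, a Delta table is treated as external when it is created with an explicit storage location. The sketch below only builds such a statement as a string; the table name, columns, and path are made-up examples, and `external_delta_table_ddl` is a hypothetical helper rather than a Databricks API.

```python
def external_delta_table_ddl(table_name: str, columns: str, location: str) -> str:
    """Build a CREATE TABLE statement whose LOCATION clause makes the table external."""
    return (
        f"CREATE TABLE IF NOT EXISTS {table_name} ({columns}) "
        f"USING DELTA LOCATION '{location}'"
    )


# Hypothetical table name, schema, and mount path, for illustration only.
ddl = external_delta_table_ddl(
    "sales_ext", "id BIGINT, amount DOUBLE", "/mnt/lake/sales_ext"
)
print(ddl)
```

In a notebook the resulting string would be passed to `spark.sql(ddl)`.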

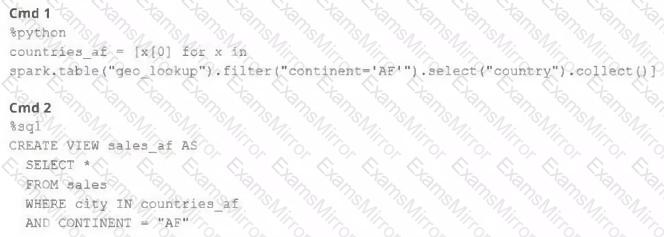

A junior member of the data engineering team is exploring the language interoperability of Databricks notebooks. The intended outcome of the below code is to register a view of all sales that occurred in countries on the continent of Africa that appear in the geo_lookup table.

Before executing the code, running SHOW TABLES on the current database indicates the database contains only two tables: geo_lookup and sales.

Which statement correctly describes the outcome of executing these command cells in order in an interactive notebook?

The Databricks CLI is used to trigger a run of an existing job by passing the job_id parameter. The response indicating that the job run request has been submitted successfully includes a field run_id.

Which statement describes what the number alongside this field represents?

A Delta Lake table representing metadata about content from users has the following schema:

user_id LONG, post_text STRING, post_id STRING, longitude FLOAT, latitude FLOAT, post_time TIMESTAMP, date DATE

Based on the above schema, which column is a good candidate for partitioning the Delta Table?
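One common rule of thumb, stated here as an assumption rather than as the exam's reasoning, is that partition columns should have relatively few distinct values. The sketch below counts distinct values per column over a handful of invented rows that use three of the columns from the schema above; the data and the `distinct_counts` helper are purely illustrative.

```python
from collections import defaultdict

# Invented sample rows; real tables would be profiled with Spark instead.
rows = [
    {"user_id": 1, "post_id": "a1", "date": "2024-01-01"},
    {"user_id": 2, "post_id": "a2", "date": "2024-01-01"},
    {"user_id": 3, "post_id": "a3", "date": "2024-01-02"},
    {"user_id": 1, "post_id": "a4", "date": "2024-01-02"},
]


def distinct_counts(rows):
    """Return the number of distinct values seen in each column."""
    seen = defaultdict(set)
    for row in rows:
        for col, value in row.items():
            seen[col].add(value)
    return {col: len(values) for col, values in seen.items()}


counts = distinct_counts(rows)
lowest = min(counts, key=counts.get)  # column with the fewest distinct values
print(counts, lowest)
```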

The marketing team is looking to share data in an aggregate table with the sales organization, but the field names used by the teams do not match, and a number of marketing-specific fields have not been approved for the sales org.

Which of the following solutions addresses the situation while emphasizing simplicity?
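One way to expose a subset of columns under different names is a view that selects only the approved fields and aliases them. The sketch below builds such a statement as a string; every table, view, and column name is hypothetical, and `aliased_view_ddl` is an illustrative helper, not part of any library.

```python
def aliased_view_ddl(view_name, source_table, column_aliases):
    """Build a CREATE VIEW statement that renames and restricts columns.

    column_aliases maps source column names to the names the consumer expects;
    columns left out of the mapping are not exposed by the view.
    """
    select_list = ", ".join(
        f"{src} AS {alias}" for src, alias in column_aliases.items()
    )
    return (
        f"CREATE OR REPLACE VIEW {view_name} AS "
        f"SELECT {select_list} FROM {source_table}"
    )


# Hypothetical names for illustration only.
view_ddl = aliased_view_ddl(
    "sales.campaign_summary",
    "marketing.campaign_agg",
    {"cust_id": "customer_id", "rev": "revenue"},
)
print(view_ddl)
```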

In order to prevent accidental commits to production data, a senior data engineer has instituted a policy that all development work will reference clones of Delta Lake tables. After testing both deep and shallow clone, development tables are created using shallow clone.

A few weeks after initial table creation, the cloned versions of several tables implemented as Type 1 Slowly Changing Dimension (SCD) stop working. The transaction logs for the source tables show that vacuum was run the day before.

Why are the cloned tables no longer working?
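The scenario can be pictured with a toy model, under the assumption that a shallow clone copies only metadata and keeps pointing at the source table's data files. The sketch below uses plain Python sets instead of Delta Lake, ignores retention periods, and uses invented file names.

```python
def type1_update(storage, active, old_file, new_file):
    """Toy Type 1 SCD overwrite: rewrite old_file as new_file in the source."""
    storage = storage | {new_file}
    active = (active - {old_file}) | {new_file}
    return storage, active


def vacuum(storage, active):
    """Toy vacuum: drop files the source's current version no longer references."""
    return storage & active


# Files physically present, and files referenced by the source's current version.
storage = {"part-0.parquet", "part-1.parquet"}
active = set(storage)

# Shallow clone: copies the file references, not the files themselves.
clone_refs = set(active)

storage, active = type1_update(storage, active, "part-1.parquet", "part-2.parquet")
storage = vacuum(storage, active)

# Files the clone still points at that no longer exist on storage.
missing = clone_refs - storage
print(missing)
```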

Where in the Spark UI can one diagnose a performance problem induced by not leveraging predicate push-down?

A Databricks job has been configured with 3 tasks, each of which is a Databricks notebook. Task A does not depend on other tasks. Tasks B and C run in parallel, with each having a serial dependency on Task A.

If task A fails during a scheduled run, which statement describes the results of this run?
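The dependency structure described above can be sketched as a tiny simulation. It assumes the default behavior in which a task runs only if all of its upstream tasks succeeded; `run_tasks` and the status labels are illustrative, not the Jobs API, and the model says nothing about changes Task A may have committed before it failed.

```python
def run_tasks(tasks, dependencies, failing):
    """Simulate one job run.

    tasks must be listed in an order that respects dependencies.
    dependencies maps a task to the list of tasks it depends on.
    failing is the set of tasks that fail when executed.
    """
    status = {}
    for task in tasks:
        upstream = dependencies.get(task, [])
        if any(status[u] != "SUCCESS" for u in upstream):
            status[task] = "SKIPPED"   # an upstream task did not succeed
        elif task in failing:
            status[task] = "FAILED"
        else:
            status[task] = "SUCCESS"
    return status


result = run_tasks(
    ["A", "B", "C"],
    {"B": ["A"], "C": ["A"]},
    failing={"A"},
)
print(result)
```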

Which statement describes Delta Lake Auto Compaction?
